Here at Spyglass, we are working on a project that includes migrating a Power Pages site to a new tenant. The process involves not only moving the Power Pages configuration data/code that all lives within Dataverse, but also custom table data with over 100,000 rows in multiple tables.

To migrate the data between tenants, we considered the following options:

- Dataverse Dataflows

- Data Migration Tools:

- Microsoft’s Configuration Migration tool

- XrmToolbox Tools

- Azure DevOps

Dataflows

It is possible to create dataflows that connect to different tenants. This is the most direct and fastest method to migrate the data between tenants, and it is useful when you need to provide transformation rules to the data before it is migrated.

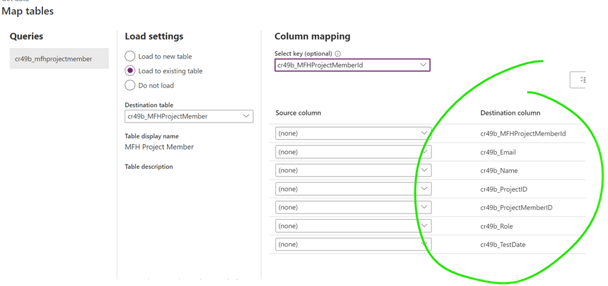

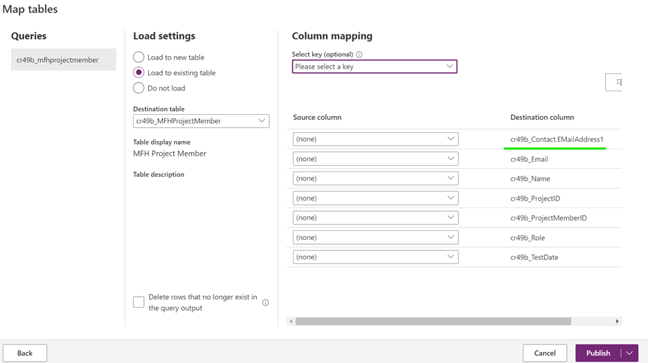

Dataflows do not migrate lookup fields by the source primary key GUID field but require an alternate key. Thus, for relationships to migrate, you need to configure an alternate key in each migrated table that has lookup columns in related tables. You will encounter this when you try to map your source data to the destination table with lookup fields. Note in the mapping below, the Contact field, a lookup field, is missing from the mapping selections because the Contact table has no alternate key. There will not be any lookup fields that do not have an alternate key shown.

Once you establish an alternate key in the table, that will show up in the list of fields as the one to map to. This is shown below after making the email address the “alternate key” in the Contacts table:

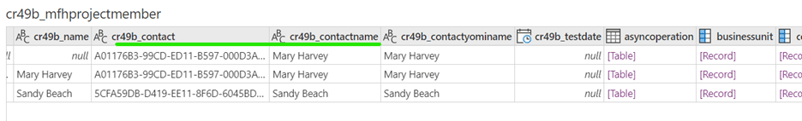

The natural alternate key to use is the Primary Name field if that is unique. The Dataflow Power Query against any of the tables with lookup columns will include the name field as well as the primary key GUID for the table. Below is the Power Query for a Dataverse table as its source that has a lookup to the Contact table. You can see that there is both the Contact ID field (cr49b_contact) as well as the name field (cr49b_contactname). Both columns will be available for any lookup field or choice field as well.

Thus, the name field is automatically available in your query in addition to the guide for mapping to a destination table name column that is used as an alternate key.

Note that the Primary Name field is not required to be unique in Dataverse tables, and many implementations do not plan for this eventuality, so the Primary Name field contains too many duplicates to use as an alternate key.

Finally, dataflows do not support the migration of many to many relationships. For our migration, the many to many relationships needed to be migrated to an alternate solution.

Data Migration Tools

For alternative migration tools, Microsoft provides its Configuration Migration tool. There are also two Data migration libraries available within the XrmToolbox, an open source Windows utility with multiple tools for working with Dataverse.

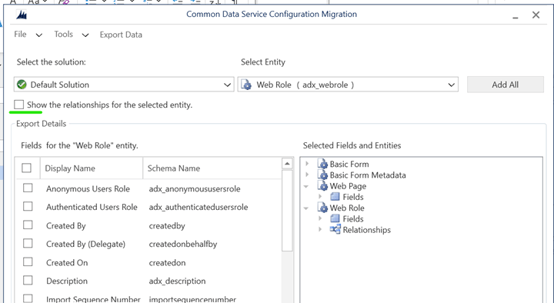

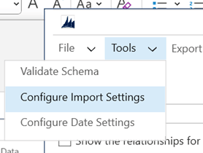

Microsoft Configuration Migration Tool

The Microsoft Configuration Migration tool was originally intended to migrate Dynamics 365 portal configuration data between environments, but it can also be used to migrate custom data. It will migrate the GUID primary keys and preserve the relationships between tables. It can also be used to migrate the many to many relationships defined in Dataverse. However, the process will take longer than using dataflows since the data first needs to be exported and then imported. Also, there could be a limitation to the number of rows exported at any time due to the size of the XML file generated to hold the exported data. There is also no ability to map the data to new columns in the destination schema. The migration tool is capable of doing both inserts and upserts to update existing records. This is the tool we used to migrate the data between the tenants due to the many to many relationships that needed to be migrated.

The migration process consists of three steps:

- Define an export schema that determines what is migrated

- Exporting the data from the source Dataverse environment

- Importing the data to the destination environment

The schema file defines the tables to be migrated, the fields and rows within those tables to be migrated, and many to many relationships that should be included in the migration.

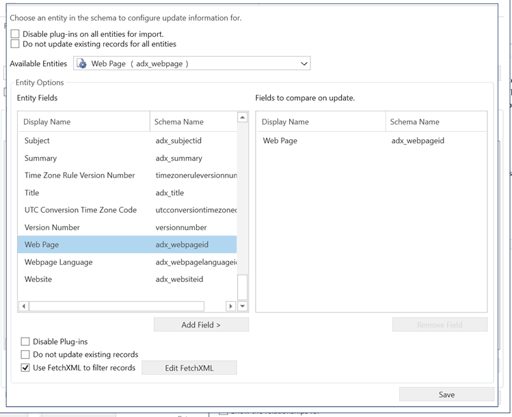

If your Primary Name field is not unique, it is critical to set primary key GUID as the matching identifier for upserts in the Import Settings because this tool will use the name field by default. The Import Settings are also where a FetchXml[1] statement can be defined to filter for the records to be migrated.

[1] FetchXml is a query language similar to SQL that can be used to define queries against Dataverse

XrmToolBox

The XrmToolBox is an excellent utility for anyone working with Dataverse data. It has an extensive list of tools that can be installed on top of the base utility. There are currently three tools that can be used for data migration that operate in a similar manner to the Configuration Migration tool described above but with a different UI:

- CDS Data Migrator

- Data Migration Tool

- Data Migration using FetchXml

If you are already familiar with XrmToolbox, these tools can be used similarly to the Configuration Migration tool defined above. Note that like the Configuration Migration tool, there is no way to map to different destination columns. These tools are intended to migrate into a matching schema to the source environment.

Azure DevOps

Lastly, Azure DevOps has introduced Pipeline actions that are able to use a schema file as defined above for the Configuration Migration tool to Export data from a Dataverse environment and import it to a new environment. Azure DevOps uses either a Service Principal or App Registration with Client ID and Secret to connect to a Dataverse environment. That S2S user does not need any specific API permissions other than basic User, but it does need to be granted Admin Consent and added with System Administrator rights to any Power Platform environment that it needs to connect to. That can be in the same tenant or a different one.

Using these actions in Azure DevOps pipelines is excellent for automated deployments between development lifecycle environments (Dev, QA, Prod) in addition to deploying solutions that do not contain custom data.

While using Azure DevOps will keep the migrated data in the cloud, it is not as practical for a one-time tenant to tenant migration of many records to store such large data files in Azure DevOps.

We hope that you found this blog post on Migrating Dataverse Data Between Tenants insightful. If you have any further questions, please feel free to contact us!