Large Language Models (LLMs) can be used for a variety of tasks, such as translation, summarization, and question answering. That versatility is powerful, but it requires carefully balancing resources, memory, and tools. Building this orchestration from scratch adds overhead and can create technical debt that is difficult to pay down later. LLM frameworks are emerging to avoid this by simplifying the process of building an application. Incorporating an LLM framework into your AI orchestration layer can accelerate development and provide flexibility for integrating a variety of services.

Every orchestra needs a conductor. With the rise of LLM frameworks, two "conductors" stand out: Langchain and Semantic Kernel. Both are open-source frameworks that provide tools for integrating LLMs into applications, but each has its own features and use cases.

Features

Langchain is a modular framework that simplifies the process of building advanced language model applications. It supports models from prominent AI platforms like OpenAI, and it is available for Python and JavaScript/TypeScript.

Complex tasks run through a sequence of components to generate the final response.

| Step | Component | Description |
| --- | --- | --- |
| 1 | Model I/O | Interface with language models |
| 2 | Retrieval | Interface with application-specific data |
| 3 | Chains | Construct sequences of calls |
| 4 | Agents | Let chains choose which tools to use given high-level directives |
| 5 | Memory | Persist application state between runs of a chain |
| 6 | Response | Output of results |
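To make the pipeline idea concrete, here is a minimal, framework-free sketch in Python of how retrieval, a model call, a chain, and memory might fit together. It does not use the Langchain API: `fake_model`, `retrieve`, and `SimpleChain` are hypothetical names invented for illustration, and the "model" is a stub that simply echoes its prompt.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call (assumption: no network, no API key)."""
    return f"[model answer to: {prompt}]"


def retrieve(question: str, documents: Dict[str, str]) -> str:
    """Naive retrieval: return documents whose key appears in the question."""
    hits = [text for key, text in documents.items() if key in question.lower()]
    return " ".join(hits)


@dataclass
class SimpleChain:
    """Runs retrieval, prompt construction, and the model call in sequence."""

    documents: Dict[str, str]
    model: Callable[[str], str] = fake_model
    memory: List[str] = field(default_factory=list)  # state kept between runs

    def run(self, question: str) -> str:
        context = retrieve(question, self.documents)       # Retrieval
        history = " | ".join(self.memory)                  # Memory (read)
        prompt = f"History: {history}\nContext: {context}\nQuestion: {question}"
        answer = self.model(prompt)                        # Model I/O
        self.memory.append(question)                       # Memory (write)
        return answer                                      # Response


chain = SimpleChain(documents={"refund": "Refunds take 5 days."})
print(chain.run("What is the refund policy?"))
print(chain.run("And shipping?"))  # the first question now appears in History
```

A real framework replaces each stub with a pluggable component, but the flow of data from step to step is the part worth noticing here.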

Semantic Kernel is a lightweight open-source SDK that lets developers call LLMs from services such as Azure OpenAI using conventional programming languages like C# and Python. It provides a simple programming model in which developers add custom plugins that can be chained together, and it automatically orchestrates an execution plan to fulfill the user’s request.

Semantic Kernel follows a similar pipeline pattern.

| Step | Component | Description |
| --- | --- | --- |
| 1 | Kernel | The kernel orchestrates a user’s tasks |
| 2 | Memories | Recall and store context |
| 3 | Planner | Mix and match plugins to execute |
| 4 | Connectors | Integration to external data sources |
| 5 | Plugins | Create custom C# or Python native functions |
| 6 | Response | Output of results |

Use Cases

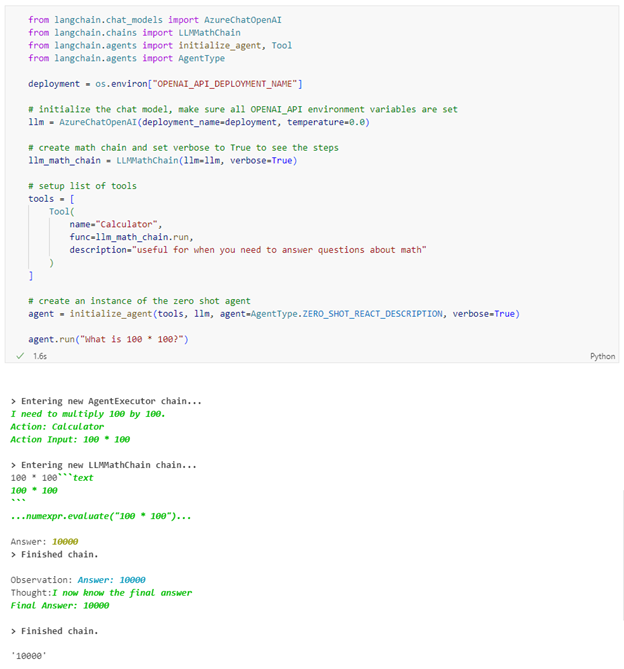

Langchain can be used for a variety of tasks, and tools are one way of adding another layer of functionality. Tools can be added to agents based on what tasks are required for the job. In this example, a calculator tool is added when initializing a zero-shot agent. Then, the question is asked, “What is 100 * 100?” A zero-shot agent only responds to the current prompt and does not consider any previous chat history.
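The idea of a zero-shot agent with a calculator tool can be sketched without any framework. The Python below is an illustration only, not the Langchain API: `calculator` and `ZeroShotAgent` are hypothetical names, and a regular expression stands in for the LLM's decision about which tool to call.

```python
import ast
import operator
import re
from typing import Callable, Dict

# Arithmetic operators the calculator tool accepts.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculator(expression: str) -> float:
    """Evaluate a basic arithmetic expression such as '100 * 100' without eval()."""

    def _eval(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        raise ValueError("unsupported expression")

    return _eval(ast.parse(expression, mode="eval").body)


class ZeroShotAgent:
    """Hypothetical agent: decides from the current prompt only, keeps no history."""

    def __init__(self, tools: Dict[str, Callable[[str], float]]):
        self.tools = tools

    def run(self, prompt: str) -> str:
        # Stand-in for the LLM's reasoning: look for an arithmetic expression.
        match = re.search(r"\d[\d\s.+\-*/]*", prompt)
        if match and "calculator" in self.tools:
            return str(self.tools["calculator"](match.group().strip()))
        return "No tool matched this prompt."


agent = ZeroShotAgent(tools={"calculator": calculator})
print(agent.run("What is 100 * 100?"))
```

Because the agent stores nothing between calls, every prompt is handled in isolation, which is the behavior the term "zero-shot" describes above.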

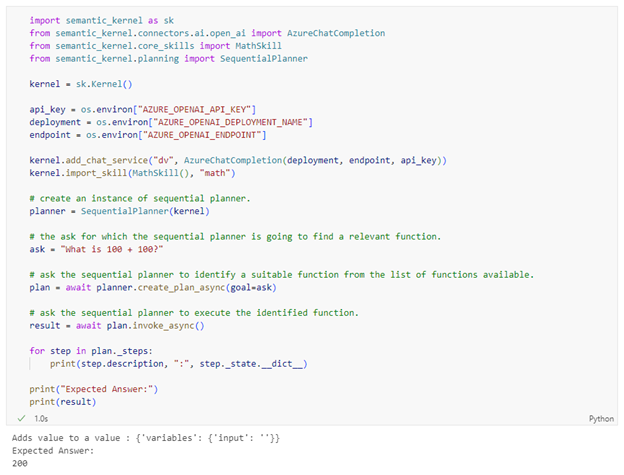

Semantic Kernel is similar to Langchain but refers to tools as skills. Instead of agents, Semantic Kernel has planners. For this example, the question needed to be altered since the math skill only supports addition and subtraction.
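The planner-and-skill relationship can be sketched in plain Python as well. This is not the Semantic Kernel API: `MathSkill`, `make_plan`, and `execute` are hypothetical names, the planner is a simple tokenizer rather than an LLM, and the reworded question "What is 100 + 100?" is an assumed example rather than the one used in the original.

```python
import re
from typing import List, Tuple


class MathSkill:
    """Hypothetical skill exposing only addition and subtraction."""

    @staticmethod
    def add(a: float, b: float) -> float:
        return a + b

    @staticmethod
    def subtract(a: float, b: float) -> float:
        return a - b


Step = Tuple[str, float]  # (skill function name, operand)


def make_plan(request: str) -> Tuple[float, List[Step]]:
    """Stand-in for a planner: turn '100 + 100 - 50' into ordered skill calls."""
    tokens = re.findall(r"\d+(?:\.\d+)?|[+\-*/]", request)
    if not tokens:
        raise ValueError("no arithmetic found in the request")
    names = {"+": "add", "-": "subtract"}
    steps: List[Step] = []
    for op, operand in zip(tokens[1::2], tokens[2::2]):
        if op not in names:
            # The skill has no matching function, so no plan can be built.
            raise ValueError(f"the math skill has no function for '{op}'")
        steps.append((names[op], float(operand)))
    return float(tokens[0]), steps


def execute(start: float, steps: List[Step], skill: type) -> float:
    """Run each planned step against the skill, feeding results forward."""
    result = start
    for name, operand in steps:
        result = getattr(skill, name)(result, operand)
    return result


start, steps = make_plan("What is 100 + 100?")
print(steps, execute(start, steps, MathSkill))
```

Passing "What is 100 * 100?" to `make_plan` raises a `ValueError` in this sketch, which mirrors why the question had to be reworded for a skill limited to addition and subtraction.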

Conclusion

Both Langchain and Semantic Kernel provide capable tools for building large language model applications. While they share some similarities, each has its own features and use cases. Langchain is built around Python and JavaScript, and it has more out-of-the-box tools and integrations. Semantic Kernel is more lightweight, and while both support Python, it also supports C#. Both technologies cover a wide range of use cases, making them versatile tools for developers. Which one you choose will depend on the languages your team supports and on the features and integrations you need out of the box. If you want to discuss this further or have any questions, please contact us!